Ensuring high-quality information has always been a challenge I take seriously. The principle that flawed input produces flawed output, long summarized as “garbage in, garbage out,” dates back to the 1950s and 1960s and remains just as relevant today. When I first began working on this problem, I kept coming back to one simple truth: you can do no better than the quality of your data.

Over the years, the terminology has evolved, but the core message is unchanged: artificial intelligence is only as good as the data behind it. That’s why I focus on integrated strategies that use data quality and context to improve AI outcomes. Without that foundation, even the best systems will fail to deliver.

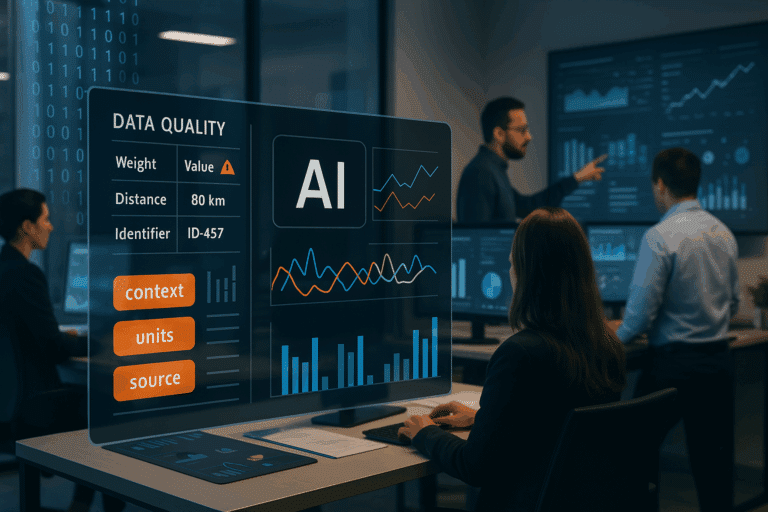

Today, as AI drives critical decisions, high-quality, contextually accurate data is more essential than ever. I’ve seen how easily things can go wrong. An AI model analyzing supply chain logistics will fail if half its data records distances in miles and the other half in kilometers. Similarly, a fraud detection system trained on incomplete financial data may miss key risks. To prevent failures like these, we must standardize data, embed contextual metadata, maintain semantic consistency, validate inputs continuously, and integrate domain-specific knowledge. Without these steps, even the most advanced AI systems risk producing misleading results.
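
As a minimal sketch of the first two steps (standardizing data and embedding contextual metadata), the Python below assumes each distance record carries an explicit `unit` field alongside its value. The `DistanceRecord` type, its field names, and the unit codes are hypothetical, chosen only to illustrate the miles-versus-kilometers problem.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Conversion factors into the canonical unit, kilometers.
# One international mile is exactly 1.609344 km.
KM_PER_UNIT = {"km": 1.0, "mi": 1.609344}


@dataclass
class DistanceRecord:
    route_id: str
    value: float
    unit: str  # contextual metadata: the unit the value was recorded in (hypothetical field)


def to_kilometers(record: DistanceRecord) -> float:
    """Convert one record to kilometers; refuse unknown units rather than guess."""
    unit = record.unit.strip().lower()
    if unit not in KM_PER_UNIT:
        raise ValueError(f"unknown unit {record.unit!r} on route {record.route_id}")
    return record.value * KM_PER_UNIT[unit]


def normalize(records: List[DistanceRecord]) -> List[Tuple[str, float]]:
    """Standardize a mixed-unit batch so every distance is expressed in kilometers."""
    return [(r.route_id, round(to_kilometers(r), 3)) for r in records]


if __name__ == "__main__":
    batch = [
        DistanceRecord("A-1", 100.0, "km"),
        DistanceRecord("B-7", 100.0, "mi"),  # same number, very different distance
    ]
    print(normalize(batch))  # [('A-1', 100.0), ('B-7', 160.934)]
```

Keeping the unit next to the value, instead of assuming one, is what lets the pipeline convert confidently and fail loudly on anything it does not recognize.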

Key Takeaways:

- AI performance is only as strong as its data quality and contextual alignment.

- Poor data quality leads to incorrect, biased, and unreliable AI outputs.

- Clear context improves AI by resolving ambiguity, filtering out irrelevant data, ensuring consistency, and improving decision accuracy.

- Organizations must implement strict data governance, contextual metadata integration, and continuous monitoring to maintain AI integrity.

- Investing in data best practices helps AI models deliver trustworthy, actionable insights.

Keep reading to explore how data quality challenges have persisted over time and why historical lessons remain critical in today’s AI-driven world.

A Historical Perspective on Data Quality

Data quality issues aren’t new. In the 1950s and 1960s, early computer systems failed due to inconsistent inputs—like weights in pounds and kilograms—leading to critical errors. This gave rise to the phrase “garbage in, garbage out.”

That principle still holds. Today’s AI systems face similar challenges: mixed formats, missing context, and inconsistent data structures. On average, organizations lose around $12.9 million annually to poor data quality, and data accuracy has dropped nearly 9% since 2021, posing serious risks to AI development.

These patterns show why data quality and context are essential for AI. AI doesn’t fix bad data; it amplifies the risk and cost.

AI at Scale vs. Underlying Data Quality

Today, organizations invest heavily in AI to improve efficiency and automate decision-making, but many still overlook a foundational truth: for AI, data quality and context matter more than any algorithm. No matter how sophisticated the model, it can’t fix bad data.

One federal agency deployed an AI-powered risk assessment tool to identify potential threats, but the results were unreliable: routine business trips were flagged as risks, while real issues went unnoticed. The reason was that the training data lacked context. Similarly, I saw a financial institution implement an AI-driven fraud detection system that flagged legitimate corporate transactions because it couldn’t distinguish corporate spending from personal purchases. Both cases show that scaling AI without addressing underlying data challenges leads to inefficiencies and costly errors. The problem is widespread: more than two-thirds (68%) of Chief Data Officers (CDOs) cited data quality as their top challenge, one that hinders AI outcomes.

The Crucial Role of Context in Data Quality

Accuracy alone doesn’t make data useful—context gives it meaning. Context includes units, definitions, and domain-specific usage that help AI interpret the data correctly.

I once tested a sensor that recorded weight, but the data was erratic. After riding along with the collection team, I learned that users were allowed to enter their own weight without specifying whether it was in pounds or kilograms. That small oversight made the data nearly useless.

To avoid these pitfalls, organizations must standardize definitions, embed contextual metadata, and cross-reference inputs. These steps aren’t optional—they’re essential to producing trustworthy, high-performing AI systems.
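
To make this concrete, here is a hedged Python sketch of checks that would have caught the weight problem above: reject entries that arrive without a unit, convert to one canonical unit, and cross-reference each reading against a plausible range and the same person’s previous reading. The function name, the 30 to 300 kg bounds, and the 2.2x heuristic are illustrative assumptions, not a standard.

```python
from typing import List, Optional, Tuple

KG_PER_LB = 0.45359237        # exact, by international definition
PLAUSIBLE_KG = (30.0, 300.0)  # hypothetical bounds for an adult body-weight field


def validate_weight(
    value: float, unit: Optional[str], previous_kg: Optional[float] = None
) -> Tuple[Optional[float], List[str]]:
    """Return (weight_kg, issues); weight_kg is None whenever an issue is found."""
    # 1. Require the unit: without it, 80 could mean 80 kg or 80 lb.
    if unit is None or not unit.strip():
        return None, ["missing unit: cannot tell pounds from kilograms"]
    unit = unit.strip().lower()
    if unit == "kg":
        kg = value
    elif unit == "lb":
        kg = value * KG_PER_LB
    else:
        return None, [f"unrecognized unit {unit!r}"]

    issues: List[str] = []
    # 2. Cross-reference against a plausible range for this field.
    low, high = PLAUSIBLE_KG
    if not low <= kg <= high:
        issues.append(f"{kg:.1f} kg is outside the plausible range {low:g}-{high:g} kg")

    # 3. Cross-reference against the same person's previous reading.
    #    A jump of roughly 2.2x (or 1/2.2x) suggests a pounds/kilograms mix-up.
    if previous_kg is not None and previous_kg > 0:
        ratio = kg / previous_kg
        if abs(ratio - 1 / KG_PER_LB) < 0.1 or abs(ratio - KG_PER_LB) < 0.05:
            issues.append("reading differs from the previous one by about 2.2x: possible unit mix-up")

    return (None if issues else kg), issues


if __name__ == "__main__":
    print(validate_weight(176.0, None))                    # rejected: no unit given
    print(validate_weight(176.0, "lb"))                    # accepted, about 79.8 kg
    print(validate_weight(176.0, "kg", previous_kg=80.0))  # flagged: probably pounds
```

Note that the range check alone would accept 176 “kg”; it is the cross-reference against the previous reading that exposes the likely mix-up.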

Implications for Modern AI Applications

The promise of AI is enormous, but so are the risks when data isn’t handled properly. In my experience, flawed data has led to security vulnerabilities in government systems and reputational damage in private firms. Poor data quality and missing context can cause misinterpretations that ripple across operations.

I’ve seen the same dynamic at the leadership level. Former military officers transitioning into the private sector often assume their experience will translate directly, but they underestimate the complexity of areas such as financial responsibility and competitive agility. Just as military and corporate settings require different approaches, AI needs more than data: it needs data that makes sense in its environment. Without clear context tied to each domain, even the smartest models will make flawed decisions. That is why subject matter expertise is critical to well-informed design and successful implementation.

Strategies to Enhance AI Performance Through Data Quality and Context

Ensuring reliable AI outcomes requires strong governance, data enrichment, and continuous monitoring. Strict data governance policies help prevent the inconsistencies that lead to flawed AI-informed decisions. I’ve worked with teams that didn’t align on basic data definitions, and the results were a mess. That’s why clear data labeling, automated validation tools, and cross-functional collaboration are so important.

Context is just as critical. Embedding contextual metadata lets AI distinguish between terms that sound alike but mean very different things. I recommend domain-specific classification, geospatial tagging, and linguistic filters to avoid misclassification and bias.
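
A minimal Python sketch of this idea, assuming each record carries a `domain` tag as contextual metadata: the glossary, its entries, and the `resolve` helper are hypothetical, and a real system would draw on a maintained domain vocabulary rather than a hard-coded dictionary.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Hypothetical domain glossary keyed by (domain, term). The same word maps to
# different meanings depending on the context metadata attached to a record.
GLOSSARY: Dict[Tuple[str, str], str] = {
    ("finance", "charge"): "an amount billed to an account",
    ("electrical", "charge"): "electric charge, measured in coulombs",
    ("legal", "charge"): "a formal accusation of an offense",
}


@dataclass
class Record:
    text: str
    metadata: Dict[str, str] = field(default_factory=dict)


def resolve(term: str, record: Record) -> str:
    """Interpret a term using the record's 'domain' metadata; never guess without it."""
    domain = record.metadata.get("domain")
    if domain is None:
        raise ValueError(f"cannot interpret {term!r}: record has no 'domain' metadata")
    key = (domain.lower(), term.lower())
    if key not in GLOSSARY:
        raise LookupError(f"no definition of {term!r} for domain {domain!r}")
    return GLOSSARY[key]


if __name__ == "__main__":
    bill = Record("Customer disputes a $40 charge", {"domain": "finance"})
    lab = Record("Charge builds up on the plate", {"domain": "electrical"})
    print(resolve("charge", bill))  # an amount billed to an account
    print(resolve("Charge", lab))   # electric charge, measured in coulombs
```

Raising an error when the tag is missing is a deliberate design choice: an uninterpreted record is easier to fix than a silently misclassified one.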

Even with governance and enrichment, AI must be monitored continuously to sustain data quality and context over time. Regular audits, real-time monitoring, and user feedback loops help AI adapt to evolving data landscapes. By working with a company like KaDSci, organizations can ensure AI integrity and prevent costly errors and inefficiencies.
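
Continuous monitoring can start small. As a hedged sketch in Python (standard library only), the code below profiles a trusted baseline sample once, then audits each incoming batch for two common warning signs: a rise in missing values and a shift in the mean. The function names and thresholds (a 5-percentage-point rise in the null rate, a z-score of 3) are illustrative assumptions rather than recommended settings; production monitoring usually covers many fields with richer drift measures.

```python
import math
import statistics
from typing import Dict, List, Optional


def profile(values: List[Optional[float]]) -> Dict[str, float]:
    """Summarize a trusted baseline sample: share of missing values, mean, and spread."""
    present = [v for v in values if v is not None]
    return {
        "null_rate": 1 - len(present) / len(values),
        "mean": statistics.mean(present),
        "stdev": statistics.stdev(present),  # requires at least two present values
    }


def audit_batch(
    baseline: Dict[str, float],
    batch: List[Optional[float]],
    max_null_increase: float = 0.05,
    z_threshold: float = 3.0,
) -> List[str]:
    """Compare a new batch with the baseline profile and return alert messages."""
    alerts: List[str] = []
    present = [v for v in batch if v is not None]

    # Check 1: completeness. Has the share of missing values jumped?
    null_rate = 1 - len(present) / len(batch)
    if null_rate - baseline["null_rate"] > max_null_increase:
        alerts.append(
            f"null rate rose from {baseline['null_rate']:.0%} to {null_rate:.0%}"
        )

    # Check 2: distribution shift. How many standard errors is the batch mean
    # from the baseline mean, given the baseline spread and the batch size?
    if present and baseline["stdev"] > 0:
        se = baseline["stdev"] / math.sqrt(len(present))
        z = (statistics.mean(present) - baseline["mean"]) / se
        if abs(z) > z_threshold:
            alerts.append(f"mean shifted by {z:+.1f} standard errors")

    return alerts


if __name__ == "__main__":
    baseline = profile([100.0, 102.0, 98.0, 101.0, 99.0, 100.0, 103.0, 97.0])
    print(audit_batch(baseline, [101.0, 99.0, 100.0, 102.0]))  # []
    print(audit_batch(baseline, [62.1, 63.4, 60.9, 62.8]))     # mean-shift alert
    print(audit_batch(baseline, [101.0, None, 99.0, 100.0]))   # null-rate alert
```

A batch of route distances that silently switched from kilometers to miles, for example, would pull the mean far from the baseline and trigger the shift alert, even though every individual value looks reasonable on its own.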

The Data Backbone of Reliable AI

AI’s accuracy depends on data quality and context. Without structured, consistent data, even the most advanced AI can misinterpret information. One financial institution’s AI flagged legitimate transactions as fraud while missing real threats, all because of poor data quality and missing context. To fully harness artificial intelligence, organizations must prioritize data governance, integrate contextual metadata, and maintain continuous monitoring. Trustworthy AI starts with high-quality, context-rich data.

KaDSci helps organizations navigate this challenge by implementing proven data strategies that energize your data and optimize AI performance. Whether you’re refining existing systems or integrating AI-driven solutions, our expertise ensures that your AI models operate with precision, reliability, and strategic effectiveness. Contact KaDSci today to explore how we can help you achieve data-driven success with artificial intelligence.

How Does Poor Data Quality Impact AI Model Training?

Poor data quality leads to biased predictions and unreliable outputs. Inconsistent or incomplete data can cause AI to misinterpret inputs, make flawed decisions, or break automated workflows. Ensuring structured, high-quality data with contextual accuracy is essential for AI reliability.

What Are the Best Ways to Improve Data Context for AI?

Improving data context requires contextual metadata, standardized data entry, and semantic tagging. Organizations should use real-time validation and cross-referencing to enhance AI’s ability to interpret data accurately, leading to better decision-making and more reliable insights.

How Can Businesses Ensure Long-Term Data Integrity for AI Systems?

Sustaining data quality and context for AI requires continuous monitoring, regular audits, and automated validation tools. Businesses should implement anomaly detection, feedback loops, and governance policies to prevent data drift and maintain AI accuracy over time.